“If you want to save your child from polio, you can pray or you can inoculate. Choose science.” — Carl Sagan, The Demon-Haunted World

Far be it from me to provide scientific illiterates like Donald Trump and Robert F. Kennedy, Jr. with an appreciation of vaccine science. For them and their admirers, political commitments preclude an understanding of the human immune system, how vaccines work, and how scientists go about creating them and demonstrating their safety and efficacy. So let’s take a different approach. Let’s try to cultivate an appreciation of vaccine science that is strictly historical and begins with the Revolutionary War. In this way, perhaps, vaccine skeptics can edge toward an appreciation of the foundational role of vaccination and its precursor, inoculation, to American greatness in the pre-Trump era.

_____________________

Dear Anti-Vaxxers:

Did you know that at the outset of the Revolutionary War, inoculation against smallpox – the insertion of pus from the scabs and pustules of smallpox sufferers into arms of healthy soldiers to induce typically mild attacks of smallpox – was a crucial instrument of strategic advantage for British and Continental forces alike? When inoculated British troops came into contact with healthy Continental soldiers and noncombatants, that is, the latter frequently contracted smallpox in its unattenuated, occasionally lethal form. The only defense against disablement-by-smallpox, it turned out, was inoculation, since inoculated soldiers and civilians, after recovery, were immunized against smallpox in both its deadly and attenuated forms.

Did you know that when George Washington realized that overcoming smallpox was crucial to winning the War of Independence, he mandated the inoculation of the entire Continental Army? His order of 5 February 1777 also sent a clear message to all 13 colonies: American governments – local, state, and national – were obligated to “protect public health by providing broad access to inoculation.”[1]

Did you know that when Benjamin Waterhouse began shipping Edward Jenner’s smallpox vaccine to America in 1800, George Washington, John Adams, and Thomas Jefferson hailed it as the greatest discovery of modern medicine? When Jefferson became president in 1801, he pledged to introduce the vaccine to the American public because “it will be a great service indeed rendered to human nature to strike off the catalogue of its evils so great a one as the smallpox.”

Did you know that Jefferson’s successor, James Madison, signed into law in 1813 “An Act to Encourage Vaccination”? And did you know that among its provisions was the requirement that the U.S. postal service “carry mail containing vaccine materials free of charge”?[2]

Did you know that during the Civil War, Union and Confederate Armies were so desperate to vaccinate their troops against smallpox that they had their doctors cooperate in harvesting “vaccine matter” from the lymph of healthy children and infants, especially the offspring of the formerly enslaved?[3]

Did you know that when the Civil War ended, doctors from North and South joined forces to achieve a better understanding of smallpox vaccination methods?[4] They believed an epidemiological understanding of effective vaccination was a shared mission in the service of the re-united nation.

Did you know that in 1893 New York State legislators passed a law requiring public schools to deny enrollment to any child who could not present proof of vaccination, and that the law was extended to private and parochial schools via the Jones-Tallett amendment of 1915?[5] And did you know that the legislators’ commitment to vaccination for all children was reaffirmed a half century later, when Title XIX of the Social Security Act of 1965 mandated “the right of every American child to receive comprehensive pediatric care, including vaccinations”?[6]

Two 13-year-old classmates exposed to the same strain of smallpox at the same time in their classroom in Leicester, England in 1901. One was vaccinated against smallpox in infancy. The other was not.

Did you know that throughout the 19th century, diphtheria was “the dreaded killer that stalked young children”?[7] It was an upper-respiratory inflammation of such severity that it gave rise to a “pseudomembrane” that covered the pharynx and larynx and led to death by asphyxiation. Then, in the early 1890s, Emile Roux and his team at the Pasteur Institute discovered that horses not only withstood repeated inoculation with live diphtheria bacteria, but their blood, purified into an injectable serum, both restored infected children (and adults) to health and provided healthy kids with short-term immunity. No sooner did the serum become commercially available in 1895 than the U.S. death rate among hospitalized diphtheria patients was cut in half – an astonishing fact for the time. By 1913, when the “Schick test” permitted on-the-spot testing for diphtheria, public health nurses and doctors discovered that 30% of NYC school children tested positive for the disease. Injections of serum saved the vast majority and immunized their healthy classmates. New York’s program of diphtheria immunization was copied by municipalities throughout the country. In the early 1930s, diphtheria serum gave way to a long-lasting toxoid vaccine, and in the 1940s, given in combination with pertussis and tetanus vaccines (DPT), diphtheria, “the plague among children” (Noah Webster), became a horror of the past.

A ghostly Skeleton, representing diphtheria, reaches out to strangle a sick child. Watercolor by Richard Tennant Cooper (1885–1957), commissioned by Henry S. Wellcome c. 1912 and now in the Wellcome Collection

Did you know that in Jacobson v. Massachusetts, a landmark decision of 1905, the U.S. Supreme Court affirmed the constitutionality of compulsory vaccination laws? And then, in 1922, in Zucht v. King, the Court stated that “no constitutional right was infringed by excluding unvaccinated children from school.” The decision was written by Louis Brandeis.[8]

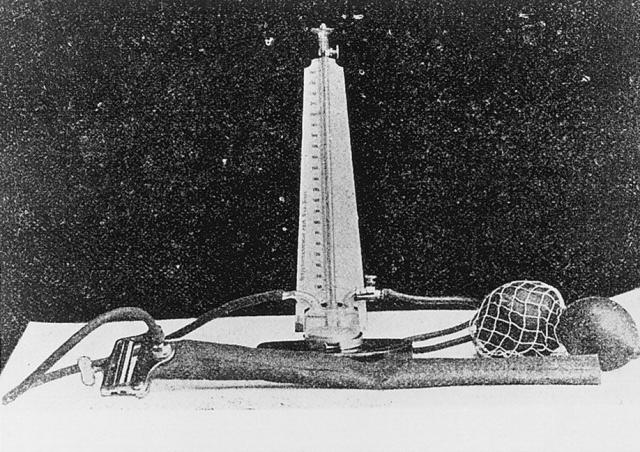

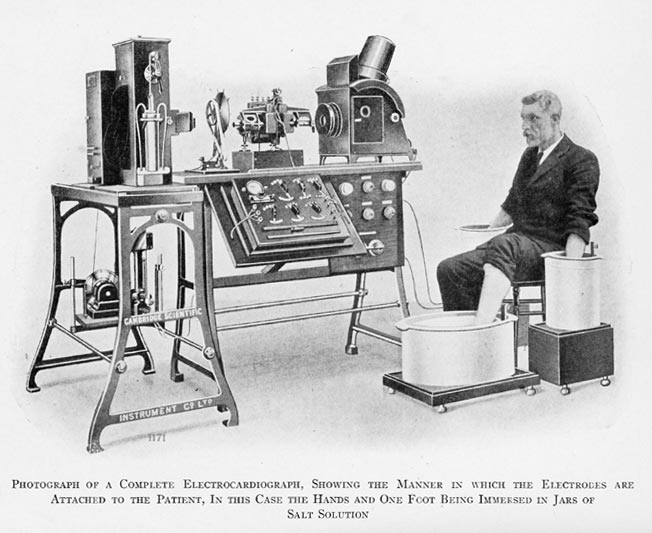

Did you know that in 1909 the U.S. Army made typhoid vaccination compulsory for all soldiers, and the requirement reduced the typhoid rate among troops from 243 per 100,000 in 1909 to 4.4 per 100,000 in three years?[9] When America entered WWI in 1917, troops sailing to France had to be vaccinated. Those who had not received their shots stateside received them on arriving at their camps. Vaccination was not negotiable. The obligation to live and fight for the nation trumped the freedom to contract typhoid, suffer, and possibly die.

Did you know that in the mid-1950s, when Cold War tensions peaked, mass polio vaccination was such a global imperative that it brought together the United States and Soviet Union? In 1956, with the KGB in tow, two leading Russian virologists journeyed to Albert Sabin’s laboratory in Cincinnati Children’s Hospital, while Sabin in turn flew to Moscow to continue the brainstorming. The short-term result was mass trials that confirmed with finality the safety and efficacy of the Sabin vaccine, while bringing its benefits to 10 million Russian school children and several million young Russian adults.[10] The long-term result was the Global Polio Eradication Initiative that began in 1988 and eradicated polio transmission everywhere in the world except Afghanistan and Pakistan.

Did you know that following the development of a freeze-dried smallpox vaccine by Soviet scientists in 1958, Soviet Deputy Health Minister Viktor Zhdanov and American public health epidemiologist Donald Henderson jointly waged a 10-year international campaign to raise enough money to make the vaccine available world-wide?[11] The result of their campaign, in partnership with WHO, was the elimination of smallpox by 1977.[12]

Did you know that in 1963 a severe outbreak of rubella (German measles) led the U.S. Congress to approve the “Early and Periodic Screening, Diagnosis, and Treatment” amendments to Title XIX of the Social Security Act of 1965? The amendments mandated the right of every American child to comprehensive pediatric care, including vaccinations.[13]

___________________

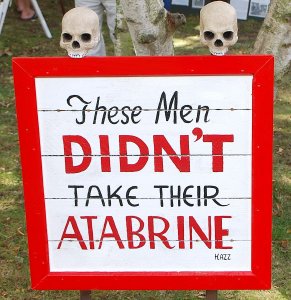

Now, in 2025, a segment of the population has reclaimed the mindset of antebellum America, when the Founding Fathers’ belief that medical progress safeguarded democracy gave way to something far less enlightened: the belief that everyone can be his or her own doctor. Sadly, what the historian Joseph Kett termed the Dark Age of American medicine[14] has been revived among those newly skeptical of vaccination, and especially resistant to compulsory vaccination of children. In its place, they proffer a contemporary variant of the anti-elitist cry of the Jacksonian era: Every man his own doctor; every man his own remedies. Transposed to the early 20th century, the Jacksonian cry resurfaced as resistance to smallpox vaccination. To the anti-vaxxers of the time, the vaccine that, in its inoculatory form, helped win the Revolutionary War, was state-sanctioned trespassing on a person’s body.[15] Now, in the wake of the coronavirus pandemic of 2020, the body has been politicized yet again.

In the case of President Donald Trump and Secretary of Health and Human Services Robert Kennedy, Jr., scientific ignorance of breathtaking proportions carries the prescientific regression back to ancient times. When Plague, in the form of coronavirus, returned to America in 2020, legions of Trump followers took their lead from a president whose understanding of viral infection mirrored the Galenic belief that whole-body states require whole-body remedies. Disinfectants like Clorox, he announced to the nation, kill microbes when we wipe our countertops with them. Why then, he mused, can’t we destroy the coronavirus by injecting bleach into our veins? What bleach does to healthy tissue, blood chemistry, and internal organs – these are questions an inquiring 8th grader might ask her teacher. But they could not occur to a medieval physician or a boastfully ignorant President. In Trump-world, as I observed elsewhere,[16] “there is no possibility of weighing the pros and cons of specific treatments for specific ailments (read: different types of infection, local and systemic). The concept of immunological specificity is literally unthinkable.” As to ingestion of hydroxychloroquine tablets, another touted Trump remedy for coronavirus, “More deaths, no benefit” begins the headline of the AP report on the VA study of Trump’s preferred Covid-19 treatment.[17]

Trump’s HHS Secretary, Robert Kennedy, Jr., would not be among the inquiring 8th graders. When coronavirus reached America, Trump at least followed a medieval script. Kennedy Jr., encased in two decades of anti-vaccine claptrap, did not need a script. He simply absorbed coronavirus into an ongoing narrative of fabrication, misinformation, and bizarre conspiracy theories calculated to scare people away from vaccination.

As HHS Secretary, Kennedy Jr.’s mission has been to complicate access to coronavirus vaccines. Most recently, he directed the CDC to rescind its recommendation of Covid-19 vaccination for pregnant women and healthy young children. The triumph of scientists in creating safe genetic RNA vaccines could not dislodge the medieval mindset and paranoid delusions that have long been his stock in trade. No, Mr. Secretary, Covid-19 was not engineered to attack Caucasians and African Americans while sparing Ashkenazi Jews and the Chinese. No, Covid-19 vaccines were not created to effect governmental control via implanted microchips. No, vaccines do not cause autism. No, Wi-Fi is not linked to cancer. No, anti-depressants do not lead to school shootings. No, pharmaceutical firms are not conspiring to poison children to make money.

During the Black Death, 14th-century Flagellants roamed the streets of continental Europe, whipping themselves in a frenzy of self-mutilation that left them lacerated if not dead. Their goal was to placate a wrathful God who had breathed down, literally, the poisonous vapors of Plague. What they did, in fact, was leave behind an infectious stew of blood, tissue, and entrails that brought Plague to local villagers. Kennedy, Jr. speaks out and showers listeners with verbal effluvia that induce them to forgo vaccination and other scientifically grounded safeguards against disease. Health-wise, he is a Flagellant, spewing forth misinformation that puts listeners and their children at heightened risk for Covid-19 and a cluster of infectious diseases long vanquished by vaccine science.

Does Kennedy, Jr. really believe that everything we have learned about the human immune system since the late 18th century is bogus, and that children who once died from smallpox, cholera, yellow fever, diphtheria, pertussis, typhoid, typhus, tetanus, and polio are still dying in droves, now from the vaccines they receive to protect them? Does he believe the increase in life expectancy in the U.S. from 47 in 1900 to 77 in 2021 has nothing to do with vaccination? Does he believe that the elimination of smallpox and polio from North America has nothing to do with vaccination? Does he believe it a fluke of nature that the last yellow fever epidemic in America was in 1905, and that typhoid fever and diphtheria now victimize only unvaccinated American travelers who contract them abroad?

The fact that we have a President comfortably at home in the Galenic world, and an HHS Secretary whose web of delusional beliefs lands him in the nether region of the Twilight Zone doesn’t mean the rest of us must follow suit. We are citizens of the 21st century and entitled to reap the life-sustaining benefits of 250 years of sustained medical progress – progress that has taken us to the doorstep of epical advances in disease prevention, management, and cure wrought by genetic medicine.[18] My urgent plea is carpe tuum tempus – seize the era in which you live. Seize the knowledge that medical science has provided. In a word: Get all your vaccines and make doubly sure your children get theirs. Do your part to Make America Sane Again.

______________________

[1] Andrew M. Wehrman, The Contagion of Liberty: The Politics of Smallpox in the American Revolution (Baltimore: Johns Hopkins Univ. Press, 2022), p. 220.

[2] Dan Liebowitz, “Smallpox Vaccination: An Early Start of Modern Medicine in America,” J. Community Hosp. Intern. Med. Perspect., 7:61-63, 2017 (https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5463674).

[3] Jim Downs, Maladies of Empire: How Colonialism, Slavery, and War Transformed Medicine (Cambridge: Harvard Univ. Press, 2021), pp. 143-145.

[4] Ibid., p. 150.

[5] James Colgrove, Epidemic City: The Politics of Public Health in New York (NY: Russell Sage Foundation, 2011), pp. 185-187.

[6] Louis Galambos, with Jane Eliot Sewell, Networks of Innovation: Vaccine Development at Merck, Sharp & Dohme, and Mulford, 1895-1995 (Cambridge: Cambridge Univ. Press, 1995), pp. 106-107.

[7] Judith Sealander, The Failed Century of the Child: Governing America’s Young in the Twentieth Century (Cambridge: Cambridge Univ. Press, 2003), p. 326.

[8] Colgrove, op. cit., pp. 170, 190.

[9] Carol R. Byerly, Mosquito Warrior: Yellow Fever, Public Health, and the Forgotten Career of General William C. Gorgas (Tuscaloosa: Univ. Alabama Press, 2024), p. 226.

[10] For elaboration, see Paul E. Stepansky, “Vaccinating Across Enemy Lines,” Medicine, Health, & History, 16 April 2021 (https://adoseofhistory.com/2021/04/16/vaccinating-across-enemy-lines).

[12] Peter J. Hotez, “Vaccine Diplomacy: Historical Perspective and Future Directions,” PLoS Neglected Trop. Dis., 8:e3808, 2014; Peter J. Hotez, “Russian-United States Vaccine Science: Preserving the Legacy,” PLoS Neglected Trop. Dis., 11:e0005320, 2017.

[13] Galambos & Sewell, op. cit., pp. 106-107.

[14] Joseph F. Kett, The Formation of the American Medical Profession: The Role of Institutions, 1780-1860 (New Haven: Yale Univ. Press, 1968), p. vii. I invoke the Jacksonian Dark Age of American medicine in a different context in Paul E. Stepansky, Psychoanalysis at the Margins (NY: Other Press, 2009), pp. 283-285.

[15] Nadav Davidovitch, “Negotiating Dissent: Homeopathy and Anti-Vaccinationism At the Turn of the Twentieth Century,” in Robert D. Johnston, ed., The Politics of Healing: Histories of Alternative Medicine in Twentieth-Century North America (New York: Routledge, 2004), pp. 23-24.

[16] Paul E. Stepansky, “Covid-19 and Trump’s Medieval Turn of Mind,” Medicine, Health, & History, 19 August 2020 (https://adoseofhistory.com/?s=Trump%27s+Medieval+turn).

[17] Marilynn Marchione, “More deaths, no benefit from malaria drug in VA virus study,” AP News, 21 April 2020 (https://apnews.com/article/malaria-donald-trump-us-news-ap-top-news-virus-outbreak-a5077c7227b8eb8b0dc23423c0bbe2b2).

[18] For a masterful introduction to the history of genetic medicine, including the discovery and applications of CRISPR gene editing, the development of RNA genetic vaccines for Covid-19, and the frontier of genetically engineered disease management, see Walter Isaacson, The Code Breaker: Jennifer Doudna, Gene Editing, and the Future of the Human Race (NY: Simon & Schuster, 2021). No less illuminating is Doudna’s own account of her pathway to CRISPR research and evolving understanding of the therapeutic potential of CRISPR-based gene editing, Jennifer A. Doudna & Samuel H. Sternberg, A Crack in Creation: Gene Editing and the Unthinkable Power to Control Evolution, esp. chs. 1 & 2 (Boston: Houghton Mifflin Harcourt, 2017). Far more limited in scope but very worthwhile in illustrating contemporary genetic diagnosis and treatment is Euan Angus Ashley, The Genome Odyssey: Medical Mysteries and the Incredible Quest to Solve Them (Milwaukee: Porchlight, 2021).

Copyright © 2025 by Paul E. Stepansky. All rights reserved. The author kindly requests that educators using his blog essays in their courses and seminars let him know via info[at]keynote-books.com.