“. . . the children’s population of this century has been submitted progressively as never before to the merciless routine of the ‘cold steel’ of the hypodermic needle.” —Karl E. Kassowitz, “Psychodynamic Reactions of Children to the Use of Hypodermic Needles” (1958)

Of course, like so much medical technology, injection by hypodermic needle has a prehistory dating back to the ancient Romans, who used metal syringes with disk plungers for enemas and nasal injections. Seventeenth- and eighteenth-century physicians extended the sites of entry to the vagina and rectum, using syringes of metal, pewter, ivory, and wood. Christopher Wren, the Oxford astronomer and architect, introduced intravenous injection in 1657, when he inserted a quill into the patient’s exposed vein and pumped in water, opium, or a purgative (laxative).

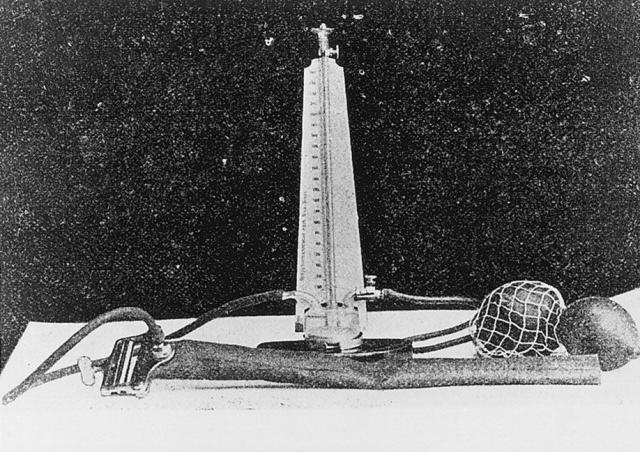

But, like so much medical technology, things only get interesting in the nineteenth century. In the first half of the century, the prehistory of needle injection includes the work of G. V. Lafargue, a French physician from the commune of St. Emilion. He treated neuralgic (nerve) pain – his own included – by penetrating the skin with a vaccination lancet dipped in morphine and later by inserting solid morphine pellets under the skin through a large needle hole. In 1844, the Irish physician Francis Rynd undertook injection by making a small incision in the skin and inserting a fine cannula (tube), letting gravity guide the medication to its intended site.[1]

The leap to a prototype of the modern syringe, in which a glass piston pushes medicine through a metal or glass barrel that ends in a hollow-pointed needle, occurred on two national fronts in 1853. In Scotland, Alexander Wood, secretary of Edinburgh’s Royal College of Physicians, injected morphine solution directly into his patients in the hope of dulling their neuralgias. There was a minor innovation and a major one. Wood used sherry wine as his solvent, believing it would prove less irritating to the skin than alcohol and less likely to rust his instrument than water. And then the breakthrough: He administered the liquid morphine through a piston-equipped syringe that ended in a pointed needle. Near the end of the needle, on one side, was an opening through which medicine could be released when an aperture on the outer tube was rotated into alignment with the opening. The syringe was designed and made by the London instrument maker Daniel Ferguson, whose “elegant little syringes,” as Wood described them, were intended to inject iron perchloride (a blood-clotting agent, or coagulant) into skin lesions and birthmarks in the hope of making them less unsightly. It never occurred to Ferguson that his medicine-releasing, needle-pointed syringes could be used for subcutaneous injection as well.[2]

Across the Channel in the French city of Lyon, the veterinary surgeon Charles Pravaz employed a piston-driven syringe of his own making to inject iron perchloride into the blood vessels of sheep and horses. Pravaz was not interested in unsightly birthmarks; he was searching for an effective treatment for aneurysms (enlarged arteries, usually due to weakening of the arterial walls) that he thought could be extended to humans. Wood was the first in print – his “New Method of Treating Neuralgia by the Direct Application of Opiates to the Painful Points” appeared in the Edinburgh Medical & Surgical Journal in 1855[3] – and, shortly thereafter, he improved Ferguson’s design by devising a hollow needle that could simply be screwed onto the end of the syringe. Unsurprisingly, then, he has received the lion’s share of credit for “inventing” the modern hypodermic syringe. Pravaz, after all, was only interested in determining whether iron perchloride would clot blood; he never administered medication through his syringe to animals or people.

Wood and followers like the New York physician Benjamin Fordyce Barker, who brought Wood’s technique to Bellevue Hospital in 1856, were convinced that the injected fluid had a local action on inflamed peripheral nerves. Wood allowed for a secondary effect through absorption into the bloodstream, but believed the local action accounted for the injection’s rapid relief of pain. It fell to the London surgeon Charles Hunter to stress that the systemic effect of injectable narcotic was primary. It was not necessary, he argued in 1858, to inject liquid morphine into the most painful spot; the medicine provided the same relief when injected far from the site of the lesion. It was Hunter, seeking to underscore the originality of his approach to injectable morphine, especially its general therapeutic effect, who introduced the term “hypodermic” from the Greek compound meaning “under the skin.”[4]

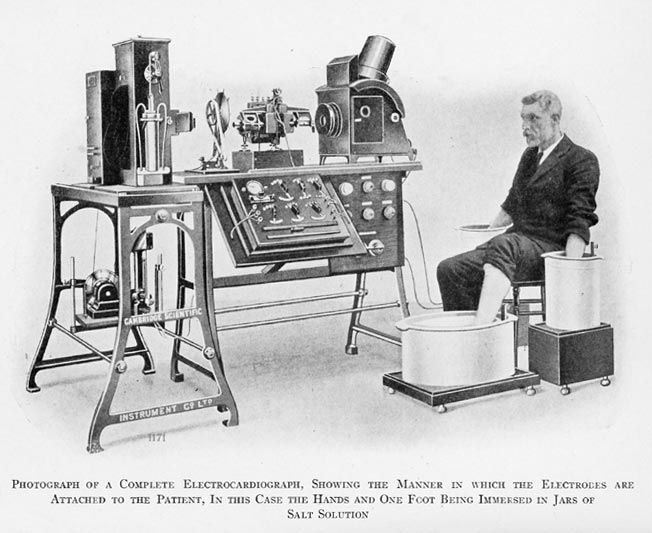

It took time for the needle to become integral to doctors and doctoring. In America, physicians greeted the hypodermic injection with skepticism and even dread, despite the avowals of patients that injectable morphine provided them with instantaneous, well-nigh miraculous relief from chronic pain.[5] The complicated, time-consuming process of preparing injectable solutions prior to the manufacture of dissolvable tablets in the 1880s didn’t help matters. Nor did the trial-and-error process of arriving at something like appropriate doses of the solutions. But most importantly, until the early twentieth century, very few drugs were injectable. Through the 1870s, the physician’s injectable arsenal consisted of highly poisonous (in pure form) plant alkaloids such as morphine, atropine (belladonna), strychnine, and aconitine, and, by decade’s end, the vasodilator heart medicine nitroglycerine. The development of local and regional anesthesia in the mid-1880s relied on the hypodermic syringe for subcutaneous injections of cocaine solution, but as late as 1905, only 20 of the 1,039 drugs in the U.S. Pharmacopoeia were injectable.[6] The availability of injectable insulin in the early 1920s heralded a new, everyday reliance on hypodermic injections, and over the course of the century, the needle, along with the stethoscope, came to stand in for the physician. Now, of course, needles and doctors “seem to go together,” with the former signifying “the power to heal through hurting” even as it “condenses the notions of active practitioner and passive patient.”[7]

The child’s fear of needles, always a part of pediatric practice, has generated a literature of its own. In the mid-twentieth century, in the heyday of Freudianism, children’s needle anxiety gave rise to psychodynamic musings. In 1958, Karl Kassowitz of Milwaukee Children’s Hospital made the stunningly commonsensical observation that younger children were immature and hence more anxious about receiving injections than older children. By the time kids were eight or nine, he found, most had outgrown their fear. Among the fewer than 30% who hadn’t, Kassowitz gravely counseled, continuing resistance to the needle might represent “a clue to an underlying neurosis.”[8] Ah, the good old Freudian days.

In the second half of the last century, anxiety about receiving injections was “medicalized” like most everything else, and in the more enveloping guise of BII (blood, injection, injury) phobia, found its way into the fourth edition of the American Psychiatric Association’s Diagnostic and Statistical Manual in 1994. Needle phobia thereupon became the beneficiary of all that accompanies medicalization – a specific etiology, physical symptoms, associated EKG and stress hormone changes, and strategies of management. The latter are impressively varied and range across medical, educational, psychotherapeutic, behavioral, cognitive-behavioral, relaxation, and desensitizing approaches.[9] Recent literature also highlights the vasovagal reflex associated with needle and blood phobia. Patients confronted with the needle become so anxious that an initial increase in heart rate and blood pressure is followed by a marked drop, as a result of which they become sweaty, dizzy, pallid, nauseous (any or all of the above), and sometimes faint (vasovagal syncope). Another interesting finding is that needle phobia (especially in its BII variant), along with its associated vasovagal reflex, probably has a genetic component, as there is a much higher concordance within families for BII phobia than for other kinds of phobia. Researchers who study twins put the heritability of BII phobia at around 48%.[10]

Needle phobia is still prevalent among kids, to be sure, but it has long since matured into a fully grown-up condition. Surveys find injection phobia in anywhere from 9% to 21% of the general population and even higher percentages of select populations, such as U.S. college communities.[11] A study by the Dental Fears Research Clinic of the University of Washington in 1995 found that over a quarter of surveyed students and university employees were fearful of dental injections, with 5% admitting they avoided or canceled dental appointments out of fear.[12] Perhaps some of these needlephobes bear the scars of childhood trauma. Pediatricians now urge control of the pain associated with venipuncture and intravenous cannulation (tube insertion) in infants, toddlers, and young children, since there is evidence such procedures can have a lasting impact on pain sensitivity and tolerance of needle pricks.[13]

But people are not only afraid of needles; they also overvalue them and seek them out. Needle phobia, whatever its hereditary contribution, is a creation of Western medicine. The surveys cited above come from the U.S., Canada, and England. Once we shift our gaze to developing countries of Asia and Africa we behold a different needle-strewn landscape. Studies attest not only to the high acceptance of the needle but also to its integration into popular understandings of disease. Lay people in countries such as Indonesia, Tanzania, and Uganda typically want injections; indeed, they often insist on them because injected medicines, which enter the bloodstream directly and (so they believe) remain in the body longer, must be more effective than orally ingested pills or liquids.

The strength, rapid action, and body-wide circulation of injectable medicine – these things make injection the only cure for serious disease.[14] So valued are needles and syringes in developing countries that most lay people, and even Registered Medical Practitioners in India and Nepal, consider it wasteful to discard disposable needles after only a single use. And then there is the tendency of people in developing countries to rely on lay injectors (the “needle curers” of Uganda; the “injection doctors” of Thailand; the informal providers of India and Turkey) for their shots. This has led to the indiscriminate use of penicillin and other chemotherapeutic agents, often injected without attention to sterile procedure. All of which contributes to the spread of infectious disease and presents a major headache for the World Health Organization.

The pain of the injection? Bring it on. In developing countries, the burning sensation that accompanies many injections signifies curative power. In some cultures, people also welcome the pain as confirmation that real treatment has been given.[15] In pain there is healing power. It is the potent sting of modern science brought to bear on serious, often debilitating disease. All of which suggests the contrasting worldviews and emotional tonalities collapsed into the fearful and hopeful question that frames this essay: “Will it hurt?”

[1] On the prehistory of hypodermic injection, see D. L. Macht, “The history of intravenous and subcutaneous administration of drugs,” JAMA, 55:856-60, 1916; G. A. Mogey, “Centenary of Hypodermic Injection,” BMJ, 2:1180-85, 1953; N. Howard-Jones, “A critical study of the origins and early development of hypodermic medication,” J. Hist. Med., 2:201-49, 1947 and N. Howard-Jones, “The origins of hypodermic medication,” Scien. Amer., 224:96-102, 1971.

[2] J. B. Blake, “Mr. Ferguson’s hypodermic syringe,” J. Hist. Med., 15: 337-41, 1960.

[3] A. Wood, “New method of treating neuralgia by the direct application of opiates to the painful points,” Edinb. Med. Surg. J., 82:265-81, 1855.

[4] On Hunter’s contribution and his subsequent vitriolic exchanges with Wood over priority, see Howard-Jones, “Critical Study of Development of Hypodermic Medication,” op. cit. Patricia Rosales provides a contextually grounded discussion of the dispute and the committee investigation of Edinburgh’s Royal Medical and Chirurgical Society to which it gave rise. See P. A. Rosales, A History of the Hypodermic Syringe, 1850s-1920s. Unpublished doctoral dissertation, Department of the History of Science, Harvard University, 1997, pp. 21-30.

[5] See Rosales, History of Hypodermic Syringe, op. cit., chap. 3, on the early reception of hypodermic injections in America.

[6] G. Lawrence, “The hypodermic syringe,” Lancet, 359:1074, 2002; J. Calatayud & A. González, “History of the development and evolution of local anesthesia since the coca leaf,” Anesthesiology, 98:1503-08, 2003, at p. 1506; R. E. Kravetz, “Hypodermic syringe,” Am. J. Gastroenterol., 100:2614-15, 2005.

[7] A. Kotwal, “Innovation, diffusion and safety of a medical technology: a review of the literature on injection practices,” Soc. Sci. Med., 60:1133-47, 2005, at p. 1133.

[8] Kassowitz, “Psychodynamic reactions of children to hypodermic needles,” op. cit., quoted at p. 257.

[9] Summaries of the various treatment approaches to needle phobia are given in J. G. Hamilton, “Needle phobia: a neglected diagnosis,” J. Fam. Prac., 41:169-75, 1995 and H. Willemsen, et al., “Needle phobia in children: a discussion of aetiology and treatment options,” Clin. Child Psychol. Psychiatry, 7:609-19, 2002.

[10] Hamilton, “Needle phobia,” op. cit.; S. Torgersen, “The nature and origin of common phobic fears,” Brit. J. Psychiatry, 134:343-51, 1979; L-G. Ost, et al., “Applied tension, exposure in vivo, and tension-only in the treatment of blood phobia,” Behav. Res. Ther., 29:561-74, 1991; L-G. Ost, “Blood and injection phobia: background and cognitive, physiological, and behavioral variables,” J. Abnorm. Psychol., 101:68-74, 1992.

[11] References to these surveys are provided by Hamilton, “Needle phobia,” op. cit.

[12] On the University of Washington survey, see P. Milgrom, et al., “Four dimensions of fear of dental injections,” J. Am. Dental Assn., 128:756-66, 1997 and T. Kaakko, et al., “Dental fear among university students: implications for pharmacological research,” Anesth. Prog., 45:62-67, 1998. Lawrence Proulx reported the results of the survey in The Washington Post under the heading “Who’s afraid of the big bad needle?” July 1, 1997, p. 5.

[13] R. M. Kennedy, et al., “Clinical implications of unmanaged needle-insertion pain and distress in children,” Pediatrics, 122:S130-S133, 2008.

[14] See Kotwal, “Innovation, diffusion and safety of a medical technology,” op. cit., p. 1136 for references.

[15] S. R. Whyte & S. van der Geest, “Injections: issues and methods for anthropological research,” in N. L. Etkin & M. L. Tan, eds., Medicine, Meanings and Contexts (Quezon City, Philippines: Health Action Information Network, 1994), pp. 137-8.

Copyright © 2014 by Paul E. Stepansky. All rights reserved.